Audio deepfakes: Celebrity-endorsed giveaway scams and fraudulent investment opportunities flood social media platforms

Bitdefender Labs has been keeping up with the latest modus operandi of cybercrooks who adapt emerging technologies to siphon money from consumers.

Artificial intelligence is just one of the many tools that help cybercriminals create and successfully spread online schemes to extort money and sensitive information.

This paper focuses on voice cloning (audio deepfake) schemes and how they spread via social media to trick unsuspecting victims.

Before delving deeper into the main subject of our research, we need to offer some insight into how voice cloning is accomplished and some of its more unorthodox uses.

What is voice cloning?

Voice cloning is the process of using AI tools to create synthetic copies of another individual's voice. This sophisticated technology uses deep learning techniques to replicate a person's speech and create highly realistic, convincing audio of a human voice. Unlike the synthetic voices produced entirely by a computer in older text-to-speech systems, voice cloning uses the actual voice of a specific individual to produce a realistic rendition of the original.

Voice cloning in a nutshell:

1. Sample collection: The first step in building a synthetic voice is to collect voice samples from an individual. The more voice data collected, the more accurate the cloned voice will be, although a few seconds of audio taken from any social media video or from a recording of someone talking can be enough.

2. Analysis: After the voice data is gathered, the voice cloning software analyzes it to identify the unique characteristics of the specific voice, including pitch, tone, pace, volume, and other vocal characteristics.

3. Model training: The voice data is used to train a machine learning model. This complex process involves feeding the voice data into an algorithm that learns to mimic the original voice.

4. Synthesis: Once training is complete, the software can generate speech in the cloned voice, transforming text inputs into speech outputs that sound like the original voice.

5. Refinement: The quality of the cloned voice can be improved continuously by feeding more data to the machine learning model and fine-tuning the parameters of the voice cloning algorithm.
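Real voice cloning tools learn vocal characteristics with deep neural networks. As a rough illustration only, the sketch below shows in plain Python how two of the simpler features named in the Analysis step, pitch and volume, can be measured from raw audio samples. The autocorrelation method, the 50 to 500 Hz search range, the function names and the synthetic test tone are our own illustrative assumptions, not the technique used by any particular cloning tool.

```python
import math


def estimate_pitch(samples, sample_rate, fmin=50.0, fmax=500.0):
    """Estimate the fundamental frequency (Hz) of a voiced frame by finding
    the lag at which the frame best matches a shifted copy of itself."""
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(int(sample_rate / fmin), len(samples) - 1)
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Autocorrelation at this lag: sum of products of samples `lag` apart.
        corr = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag


def rms_volume(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


# Usage: a synthetic 220 Hz tone sampled at 8 kHz stands in for recorded speech.
sr = 8000
frame = [0.5 * math.sin(2 * math.pi * 220 * n / sr) for n in range(800)]
print(round(estimate_pitch(frame, sr)), round(rms_volume(frame), 3))
```

Because the lag is a whole number of samples, the pitch estimate is only approximate (here, within a few hertz of 220 Hz); production systems use far more robust estimators and many more features.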

Voice cloning has many legitimate uses in education, healthcare and entertainment, including:

- Creating voice-overs and personalized voice assistants

- Dubbing actors' voices in movies

- Helping individuals with speech impairments

- Narrating audiobooks

- Creating social media posts

Despite these many lawful uses, voice cloning technology is frequently misused for fraudulent activities. Voice cloning scams use AI to duplicate the voices of trusted individuals to manipulate, deceive, and financially and physically harm others. Malicious uses include:

- Impersonating a relative or loved one to steal money: malicious actors use voice cloning to create audio messages or recordings that trick victims into handing over funds.

- Virtual kidnapping schemes – cybercrooks extort money from families by pretending to have kidnapped their loved ones.

- Cyberbullying and blackmail

- Various social engineering schemes that use AI-created voices (impersonating famous individuals) to manipulate users into clicking malicious links or transferring money.

Social media platforms – an ideal dumping ground for voice cloning scams

Millions of individuals use social media daily, so it’s no wonder these platforms have become the dumping ground for many emerging scams that employ AI and voice cloning technologies. These two advancements alone are a game changer for scammers, who are quick to exploit them to enhance the reach of their fraudulent schemes.

Now, what gives scammers on social media the edge is not just the sheer number of potential victims, hackable accounts, and personal data that is so easily obtainable. Scammers have learned to tailor their approach using advertisers’ tools and ads to potentially reach billions of users from across the globe.

Concern among US consumers about exposure to deepfakes and voice cloning has been significant over the past year: according to a recent survey by Voicebot and Pindrop, more than 57% of respondents expressed very high concern over the subject.

Bitdefender Labs has closely monitored the phenomenon of voice cloning scams over the past few weeks, especially on social media platforms such as Facebook.

Our researchers quickly discovered voice cloning scams on Meta’s popular social platform.

Here are some of our key findings:

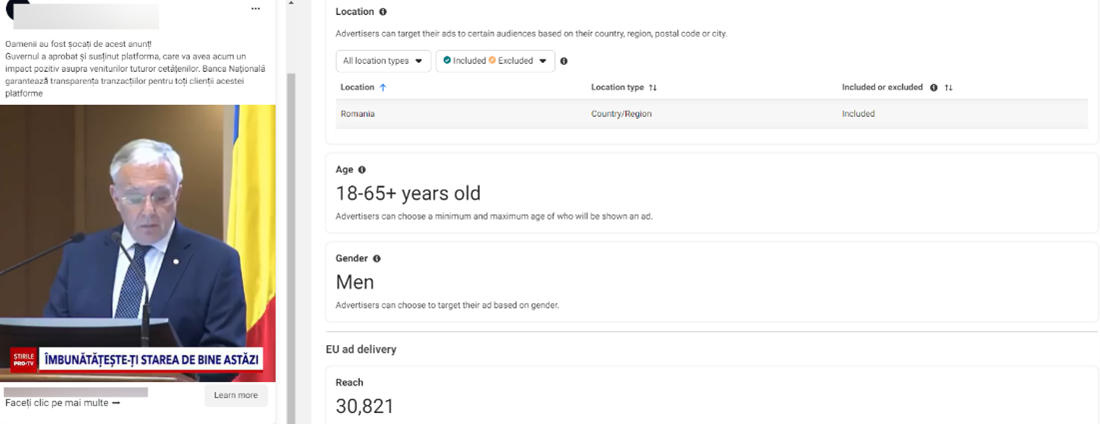

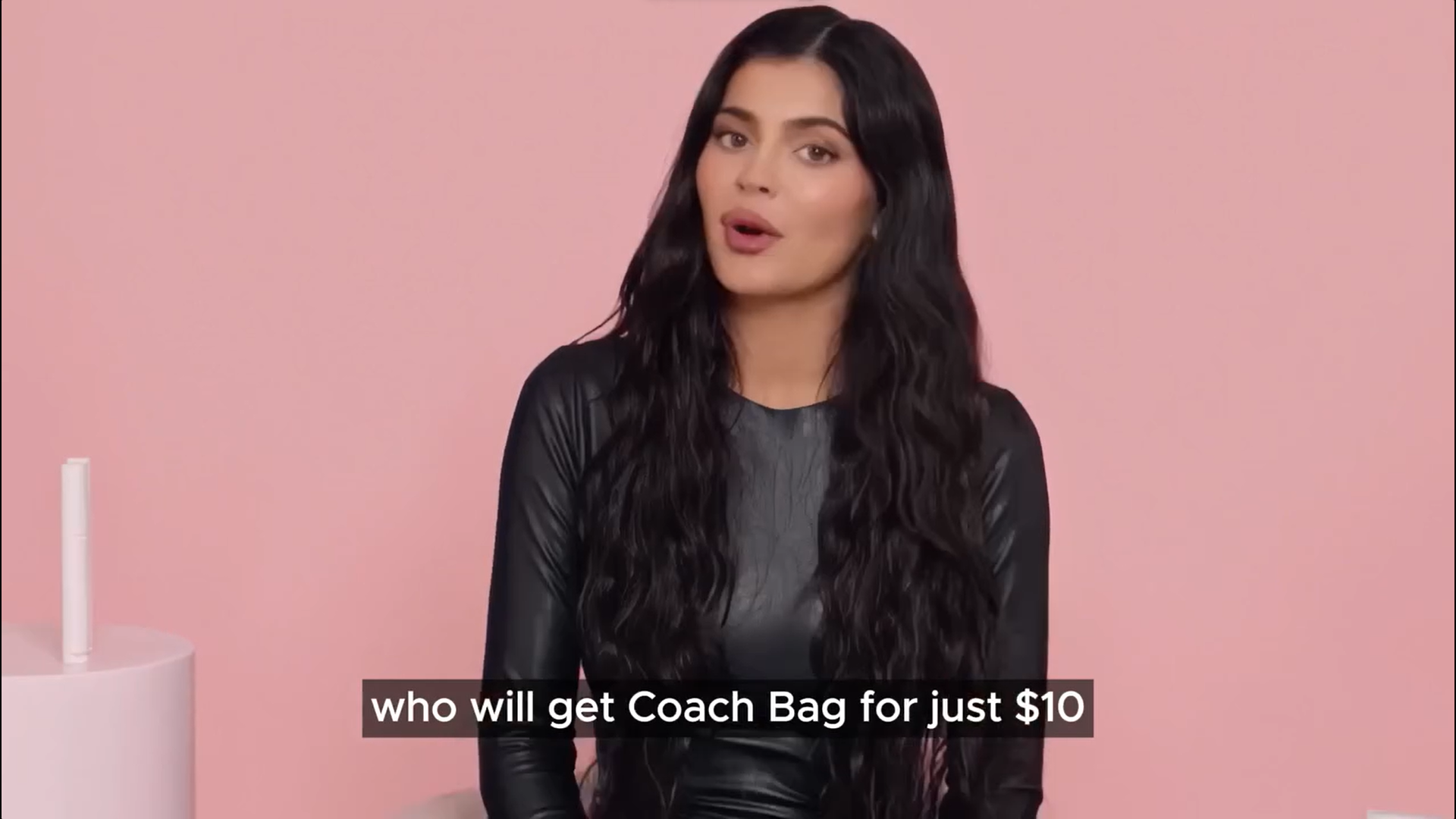

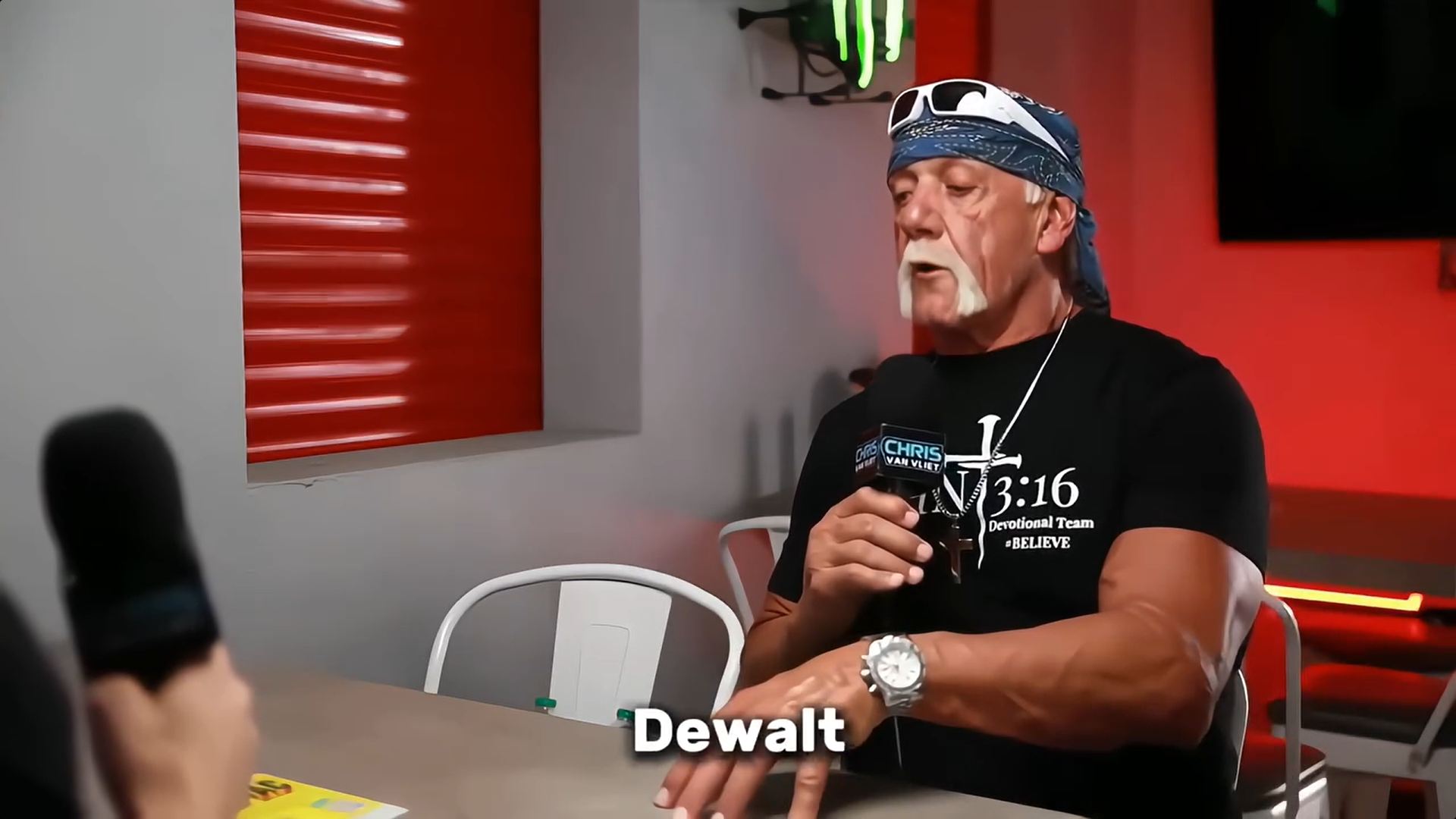

1. The bulk of the fraudulent content discovered used AI voice generators to clone the voices of well-known celebrities, including Elon Musk, Jennifer Aniston, Oprah, Mr. Beast, Tiger Woods, Kylie Jenner, Hulk Hogan and Vin Diesel.

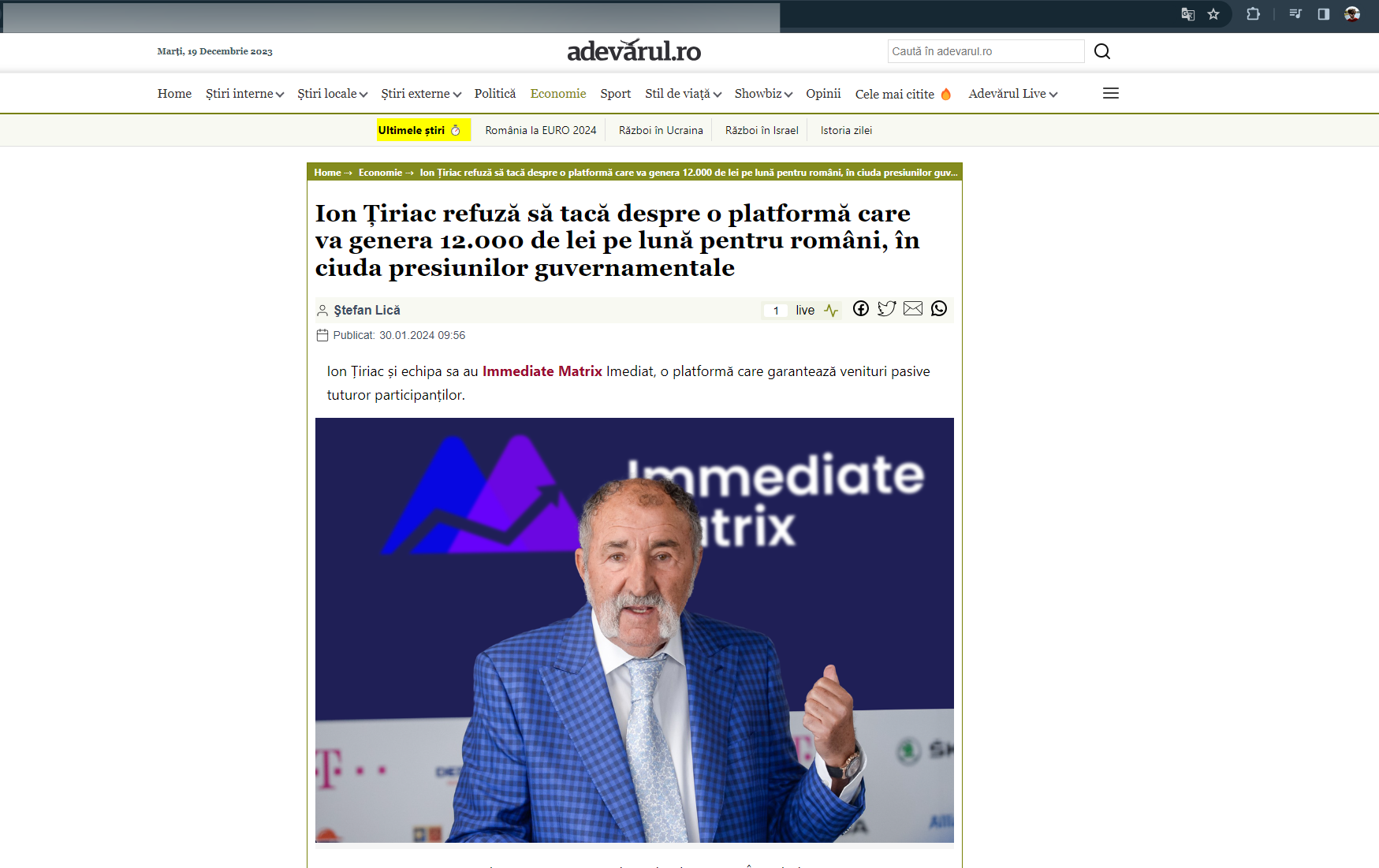

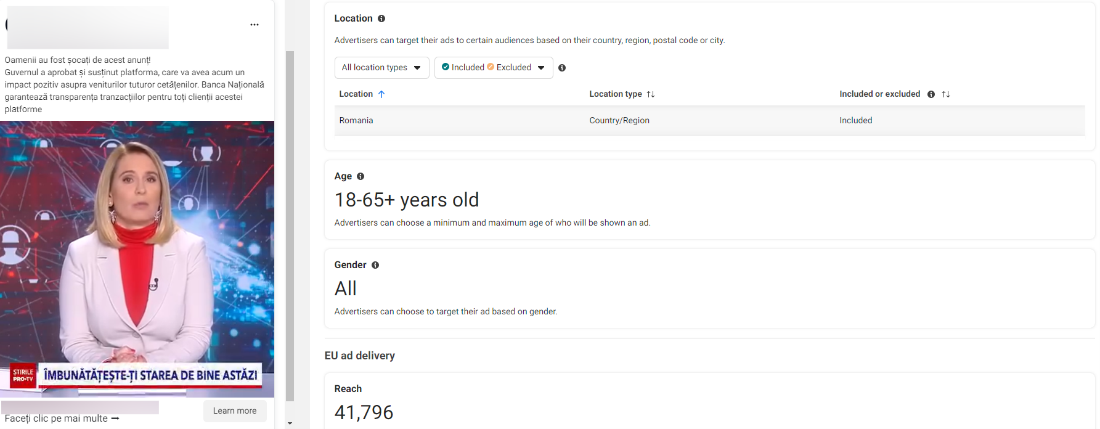

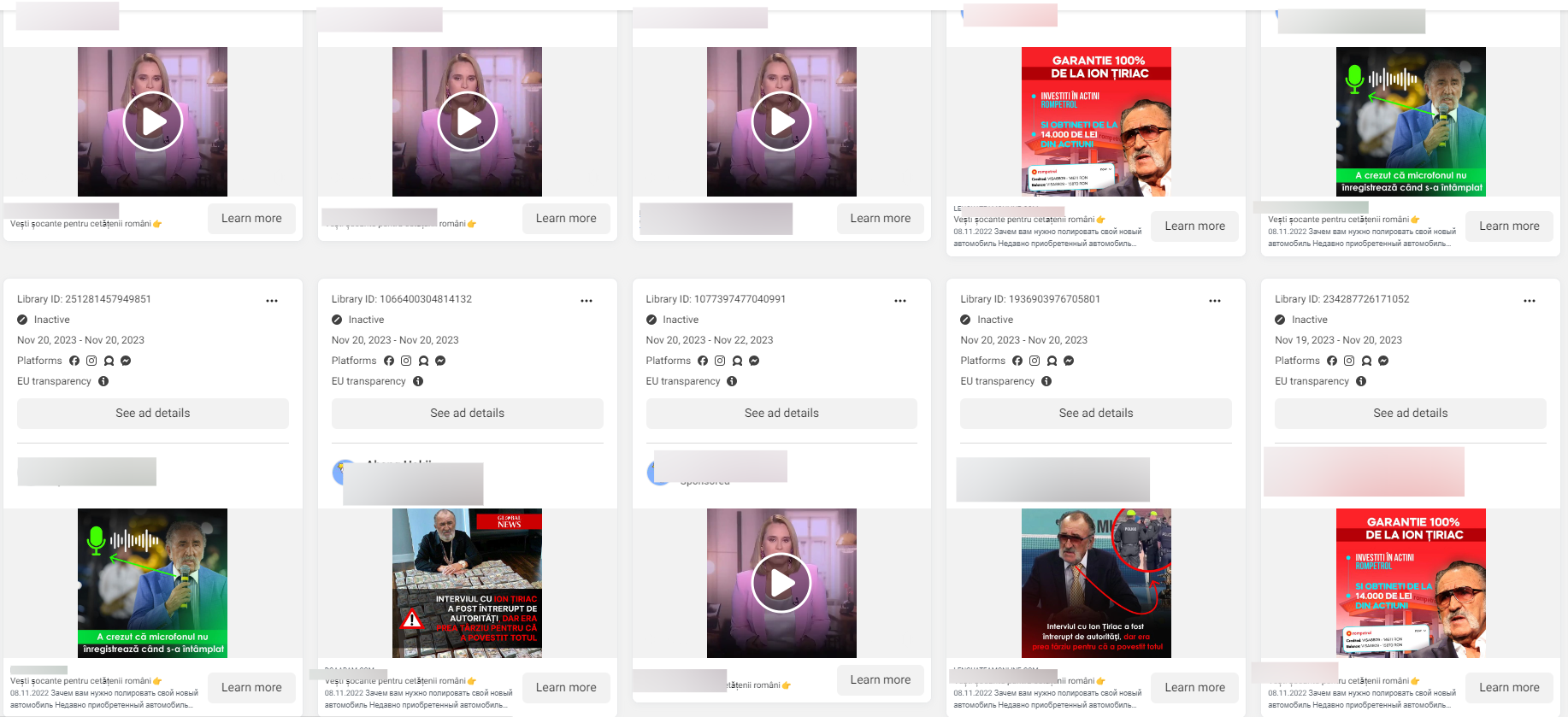

2. Scam ads designed for Romanian targets used stolen videos with the synthetically generated voices of famous news anchorwoman Andreea Esca, tennis player Simona Halep, President Klaus Iohannis, Prime Minister Marcel Ciolacu, governor of the National Bank of Romania Mugur Isarescu, and businessmen Gigi Becali and Ion Țiriac.

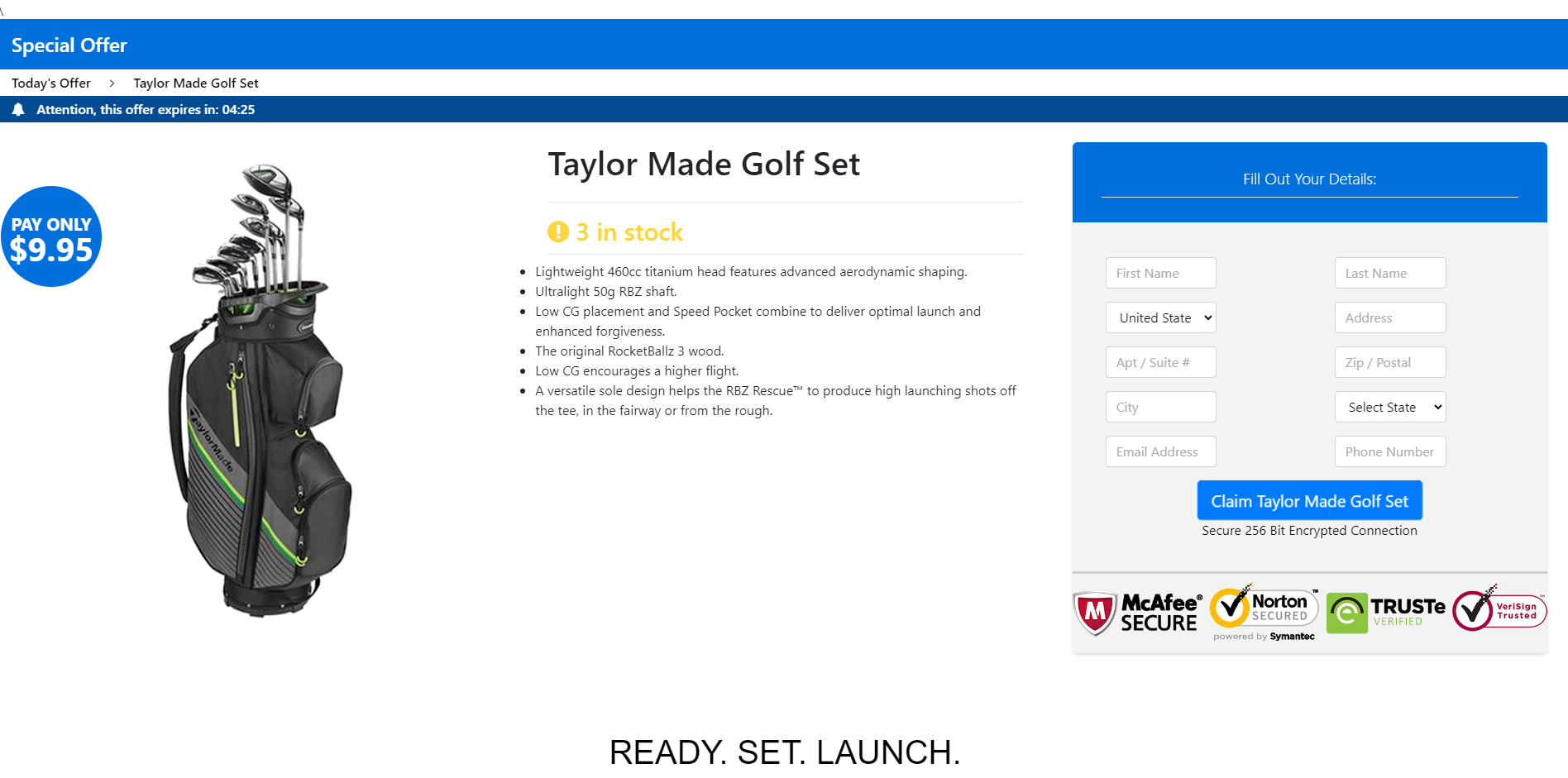

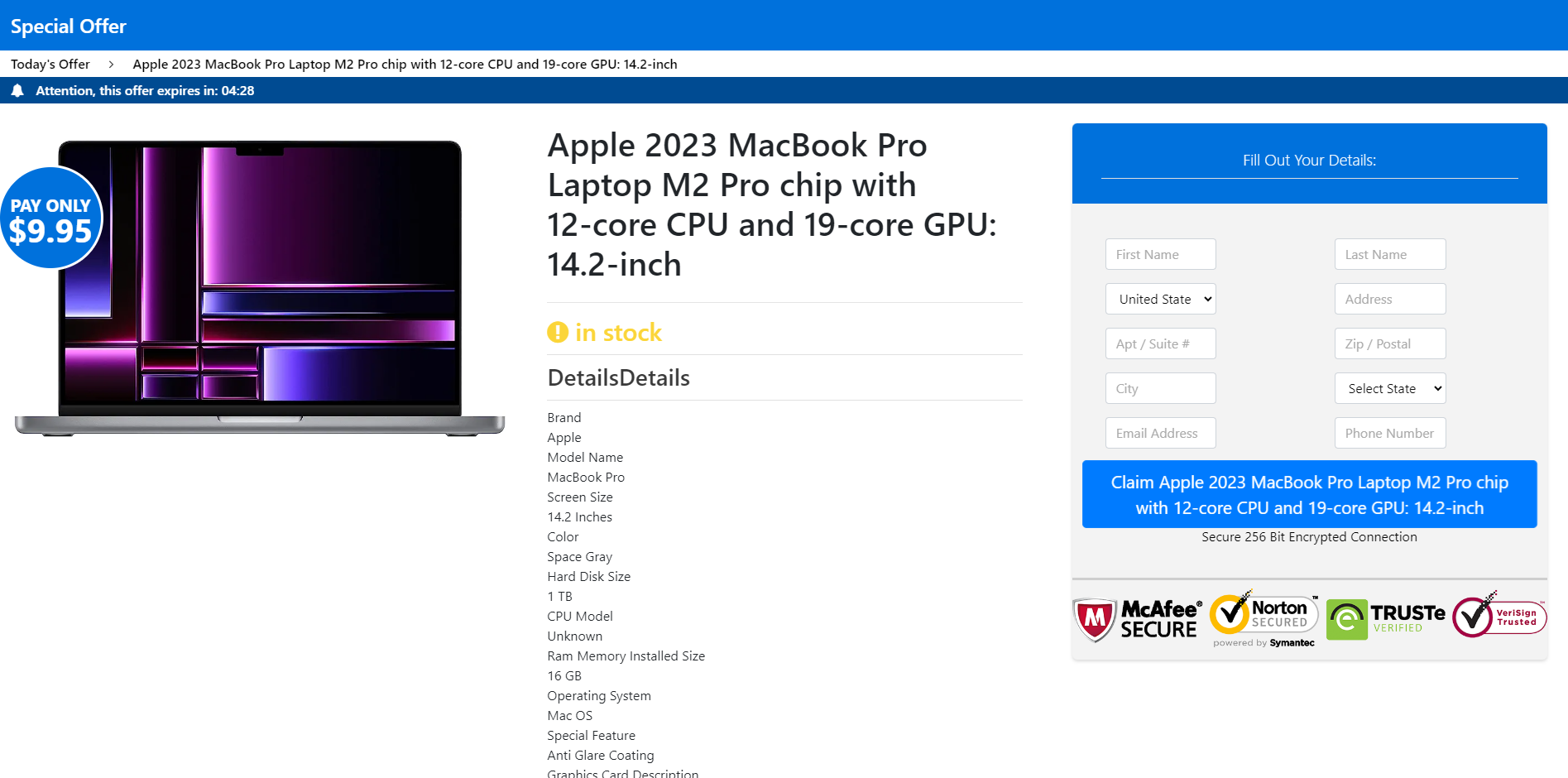

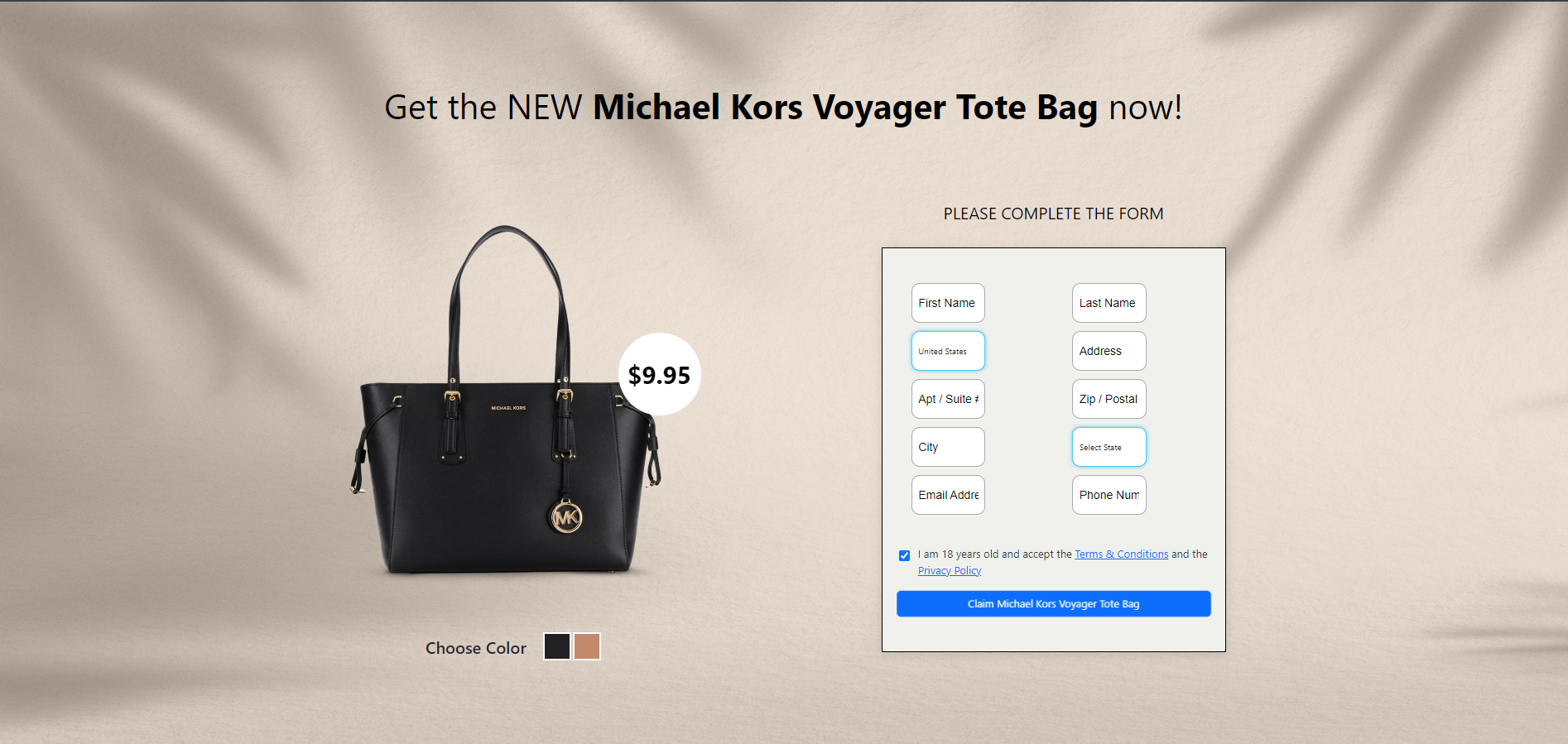

3. The scams used the images of these public figures to advance various giveaways of expensive goods such as the latest iPhone model, MacBooks, Chicco car seats, DeWalt tool sets, Dyson vacuums, and Michael Kors or Coach bags for just $2 to $15 in shipping costs.

4. Other popular topics of the voice cloning scams we discovered were investments and gambling.

5. The fraudulent videos all follow the same M.O., claiming limited availability for the first lucky 100, 1,000 or 10,000 users who act quickly to get their prize or fivefold returns on their investments.

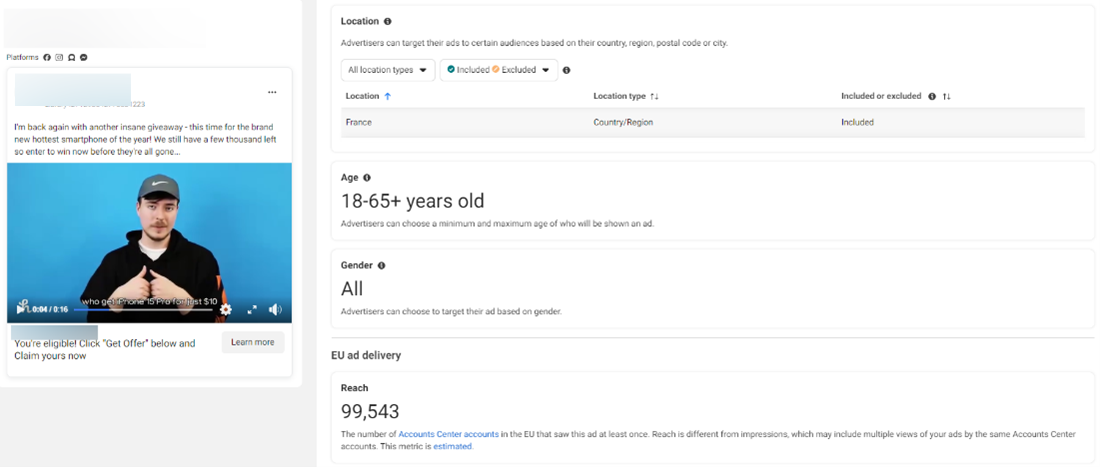

6. These voice-cloning scams reach consumers through fraudulent Facebook ads that redirect them to scam websites promoting either giveaways or investment opportunities.

7. In some cases, clicking an ad redirects users to a domain that serves different pages depending on the query parameters in the URL. If the query parameters that Facebook sets during redirection are missing, users are redirected to a harmless page, a behavior that could be used to deter sandbox analysis of the domain. If the query parameters are present, however, users are shown the scam page.
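For analysts, one way to expose this kind of parameter-based cloaking is to request the same landing URL twice, once exactly as delivered by the ad and once with the query string removed, and compare the pages returned. The sketch below prepares the two URL variants with Python's standard `urllib.parse` module and compares two already-downloaded page bodies; it does not fetch anything itself. The example URL and the `fbclid` and `utm_source` parameters are illustrative assumptions, since the exact parameters the scam domains checked are not specified here.

```python
from urllib.parse import parse_qsl, urlsplit, urlunsplit


def query_params(url):
    """Return the query parameters of a URL as a dict (blank values kept)."""
    return dict(parse_qsl(urlsplit(url).query, keep_blank_values=True))


def strip_query(url):
    """Return the same URL with its query string and fragment removed."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def cloaking_test_pair(ad_url):
    """Return the two URLs to fetch and compare: the URL as delivered by the
    ad, and the same URL with no parameters (what a sandbox might request)."""
    return ad_url, strip_query(ad_url)


def served_different_pages(body_with_params, body_without_params):
    """A mismatch between the two responses hints at parameter-based cloaking."""
    return body_with_params.strip() != body_without_params.strip()


# Usage with a hypothetical landing URL carrying typical click-tracking parameters.
ad_url = "https://landing.example/offer?fbclid=AbC123&utm_source=facebook"
print(query_params(ad_url))
print(cloaking_test_pair(ad_url))
```

An exact string comparison is deliberately simple; legitimate pages with dynamic content (timestamps, rotating ads) change on every load, so a practical check would compare page titles or structure rather than raw bytes.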

8. Most audio deepfakes and videos we analyzed are poorly executed, with some highly visible artifacts and visual abnormalities that are not present in real videos, including distorted images and mismatched lip movements.

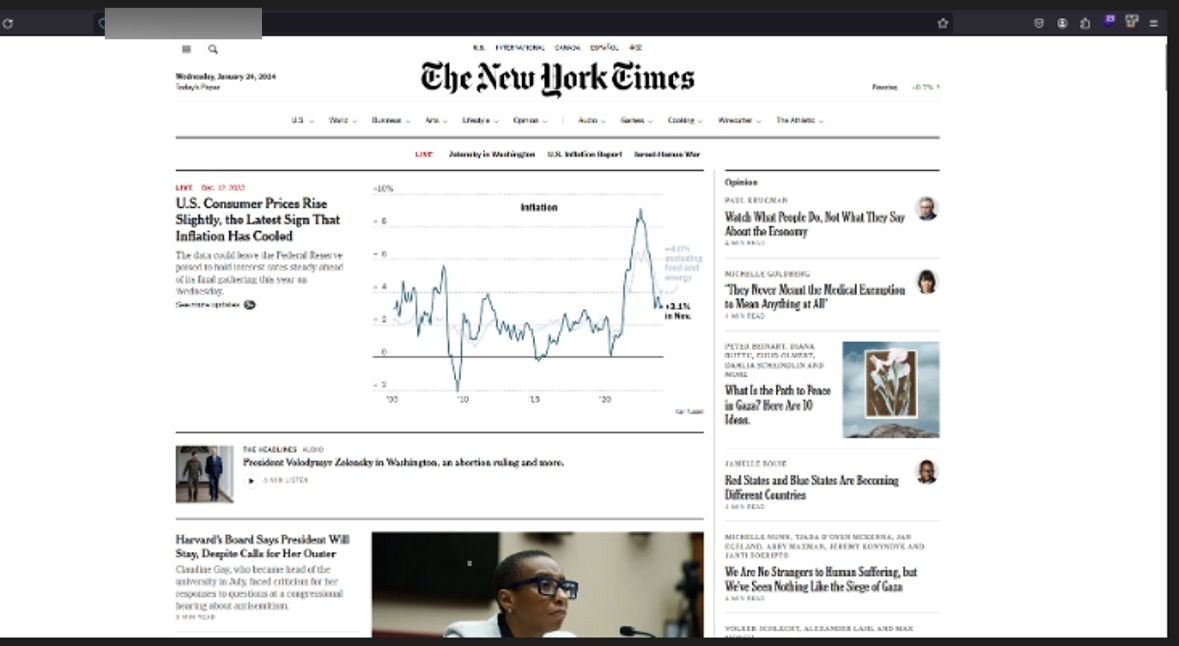

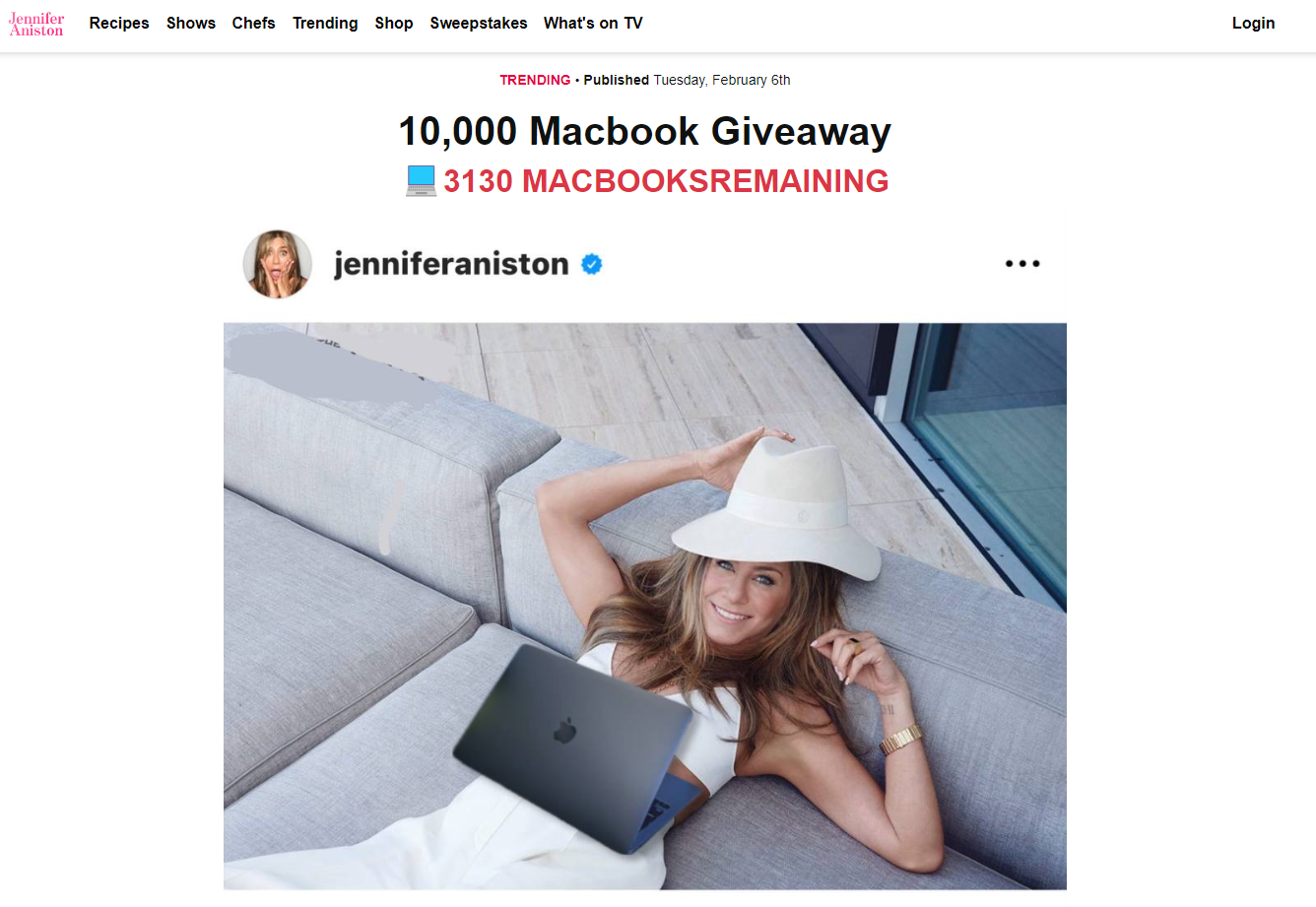

9. To add credibility to their schemes, fraudsters also created lookalike websites of popular news outlets. Specifically, the ads created for Romanian consumers linked to cloned versions of Digi24, Libertatea, and Adevarul, while other internationally focused ads directed users to a fake version of the New York Times website.
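A lookalike site can copy an outlet's design, but it cannot be hosted on the outlet's real domain. A quick defensive check is therefore to compare a link's hostname against the outlet's official domain. The sketch below is a minimal illustration under stated assumptions: the domain list is our own assumption for the outlets named above and should be verified, and the function name is hypothetical. It catches domain mismatches only, not compromised legitimate sites.

```python
from urllib.parse import urlsplit

# Assumed official domains for the outlets named above (illustrative, verify first).
OFFICIAL_DOMAINS = {
    "The New York Times": "nytimes.com",
    "Digi24": "digi24.ro",
    "Libertatea": "libertatea.ro",
    "Adevarul": "adevarul.ro",
}


def is_official_site(url, outlet):
    """True only if the URL's host is the outlet's domain or one of its subdomains.

    urlsplit() lowercases the hostname; a trailing dot is stripped so that
    'nytimes.com.' is treated like 'nytimes.com'.
    """
    host = (urlsplit(url).hostname or "").rstrip(".")
    domain = OFFICIAL_DOMAINS[outlet]
    return host == domain or host.endswith("." + domain)


# Usage: the second URL only *contains* the real domain, so it fails the check.
print(is_official_site("https://www.digi24.ro/stiri", "Digi24"))
print(is_official_site("https://digi24.ro.breaking-news.example/stiri", "Digi24"))
```

Checking the suffix with a leading dot matters: a plain `endswith("nytimes.com")` would wrongly accept a host such as `fakenytimes.com`.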

10. Our ad reach analysis (based solely on a small batch of fraudulent ads) estimates that these voice cloning scams targeted at least 1 million US and European users, including in Romania, France, Austria, Belgium, Portugal, Spain, Poland, Sweden, Denmark, Cyprus, and the Netherlands. One of the ads reached 100,000 users and had been active for a few days. However, voice cloning scams are not limited to the geographical zones mentioned above. The phenomenon is most likely global and tailored to consumer trends.

11. The fraudulent ads ran on the following Meta platforms: Facebook, Instagram, Audience Network and Messenger.

12. The most impacted demographics are users between 18 and 65 years of age.

How celebrity voice cloning scams play out on Facebook

Over the past three weeks, Meta's social platforms have been bombarded with fraudulent posts featuring celebrity-endorsed giveaways that promise access to exclusive products and services. These scams, which are increasingly common, exploit users' natural trust in celebrities and their fascination with the lives of the rich and famous.

We all know celebrities are very good at selling things to the public, so ads on social media that appear to be celebrity-endorsed are more likely to dupe unwary internet users.

Consumers in the US and Europe have been exposed to various ads featuring audio deepfakes of well-known celebrities, including Jennifer Aniston, Oprah, and Mr. Beast, that promise "free" giveaways to a lucky batch of viewers.

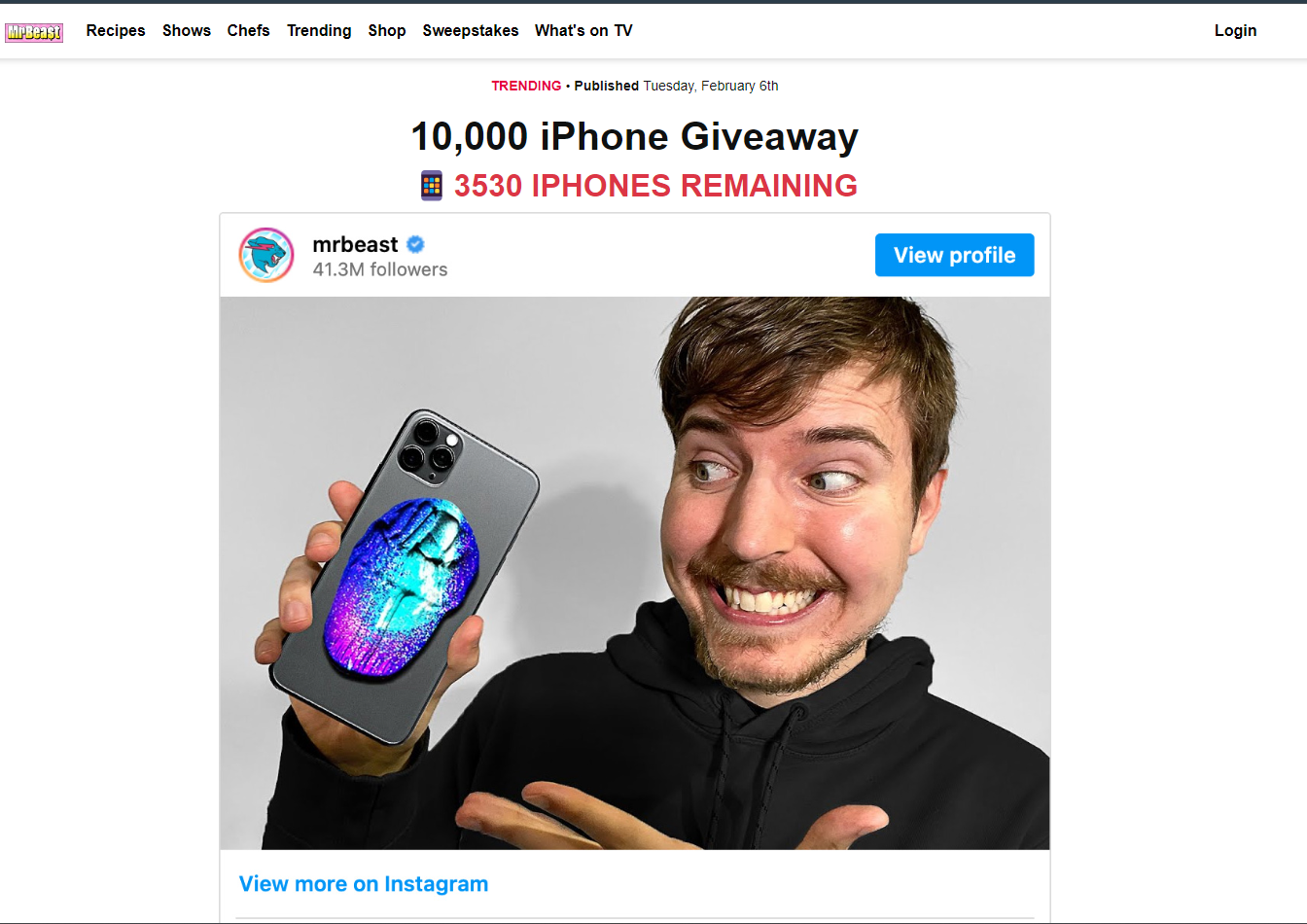

All the deepfake videos we analyzed tell the same bogus story: everyone who is watching is part of an "exclusive group of 10,000 individuals" who can acquire exclusive products for a modest payment starting from $2 (depending on the ad/product). In the screenshot below, scammers cloned the voice of YouTuber Mr. Beast, whose charitable spirit skyrocketed him to internet stardom. They also synchronized the cloned audio with the video well, making this one of the more compelling and well-orchestrated audio deepfakes we've seen in the campaign to date.

Ad videos are less than 30 seconds long and urge viewers to act quickly and click on a link to benefit from an “incredible opportunity immediately.”

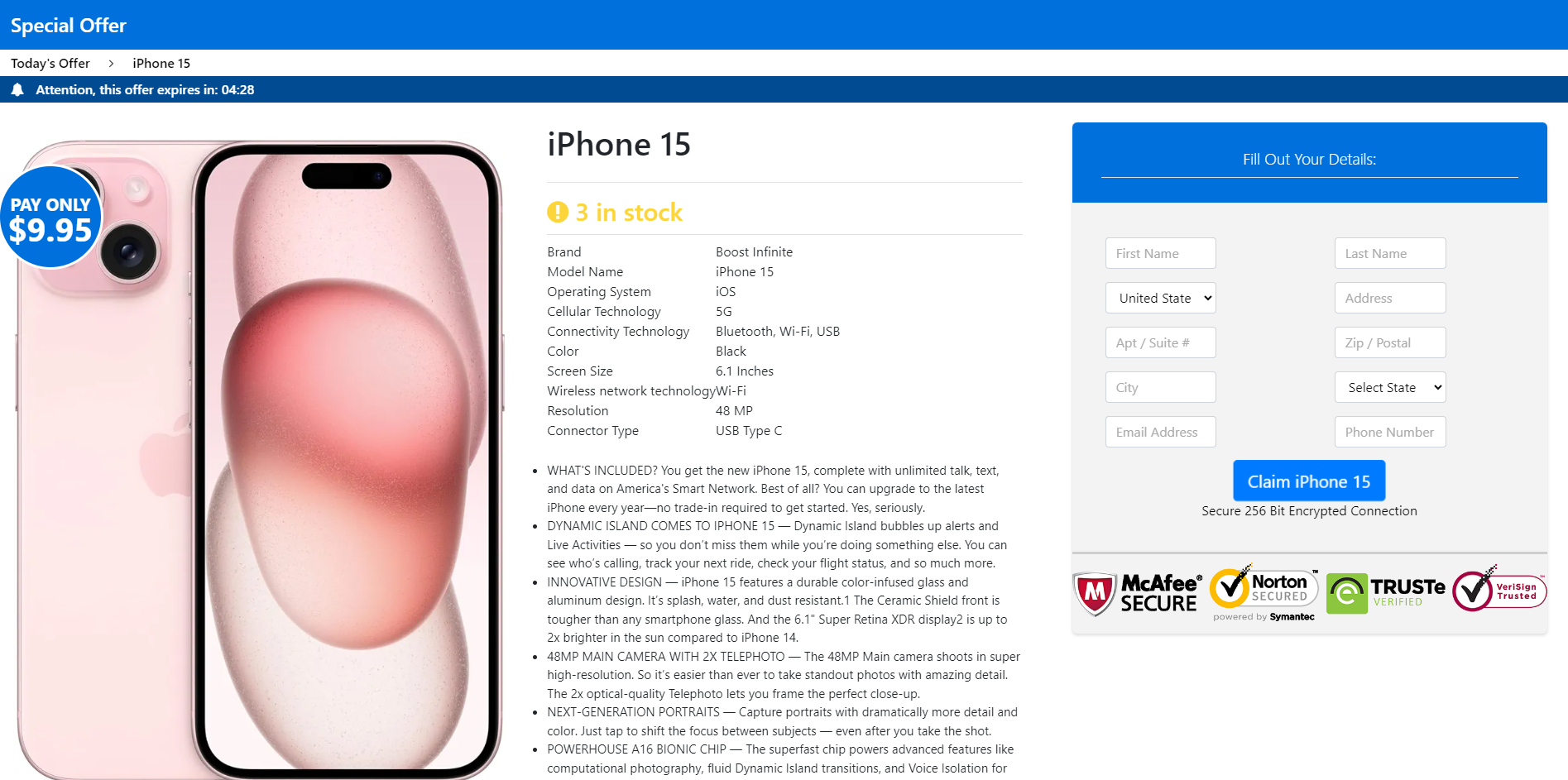

So what’s the catch? Well, lucky people only pay for shipping on the “world’s largest iPhone 15 giveaway.” Sounds far-fetched? That’s because it is.

Upon clicking the fraudulent link, users are taken to a fake website promoting the giveaway that lists a limited number of devices/products remaining.

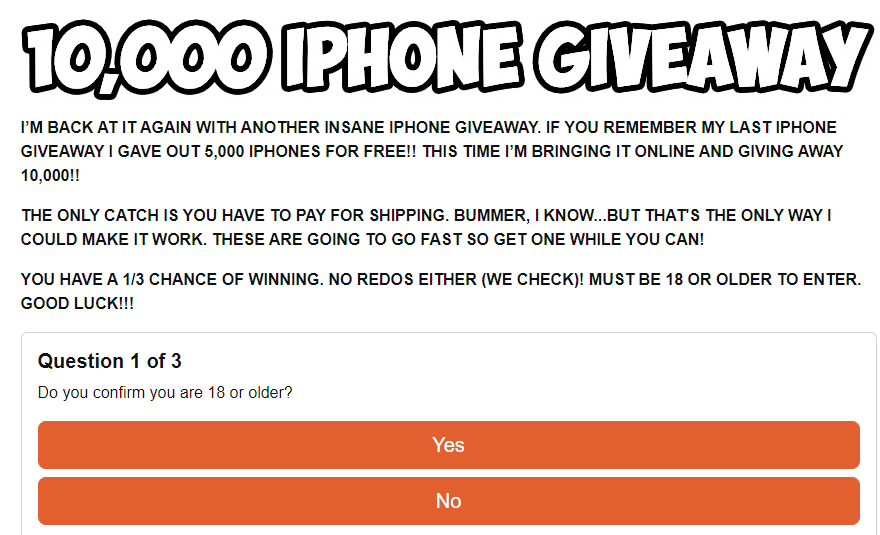

They then need to fill out a bogus survey.

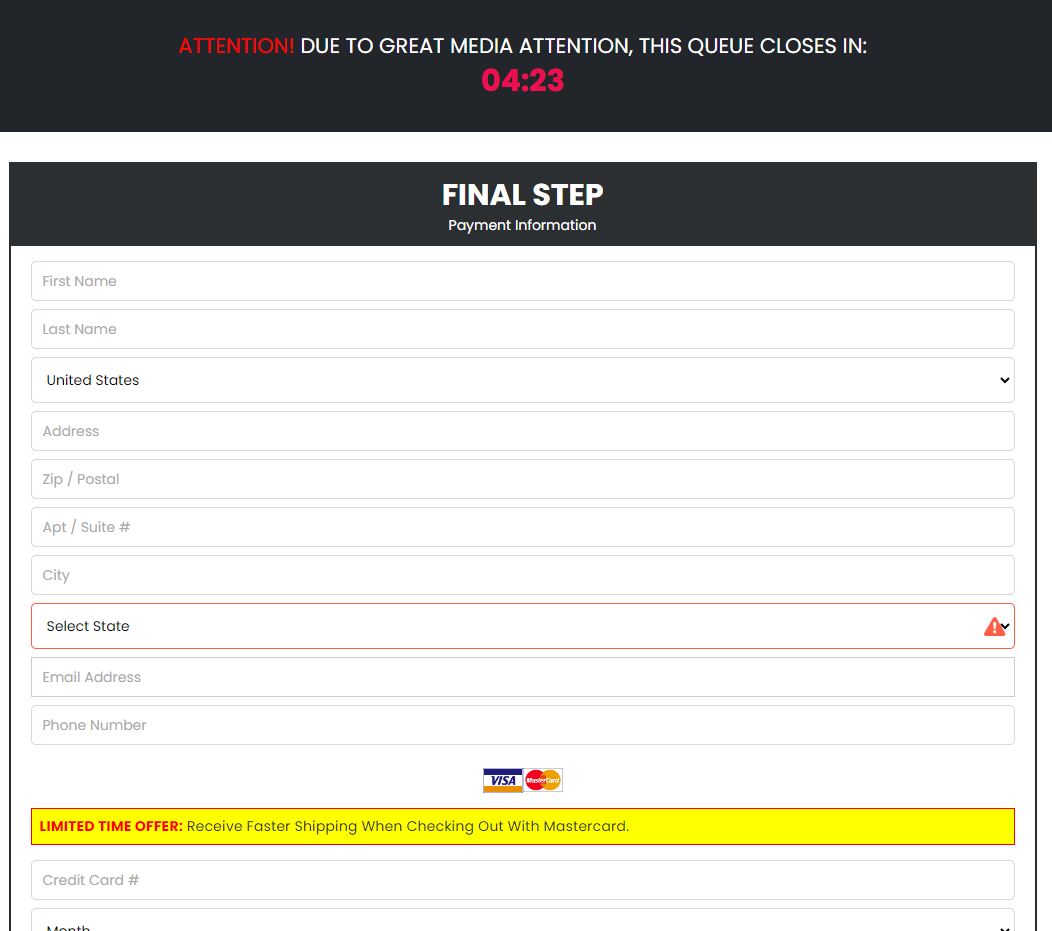

The last page is where the user must fill out details and pay for shipping. Payment is higher than the amount specified in the video. Of course, the user must provide various personal details before proceeding to checkout, including name, country, address, and contact information (email and phone number).

During checkout, users must provide their credit card numbers to pay for shipping.

Other screenshots from the fraudulent deepfake ads can be seen below:

Voice cloning scams closer to home

Threat actors also targeted Romanians with dedicated investment scams that used voice cloning to impersonate various public figures and politicians.

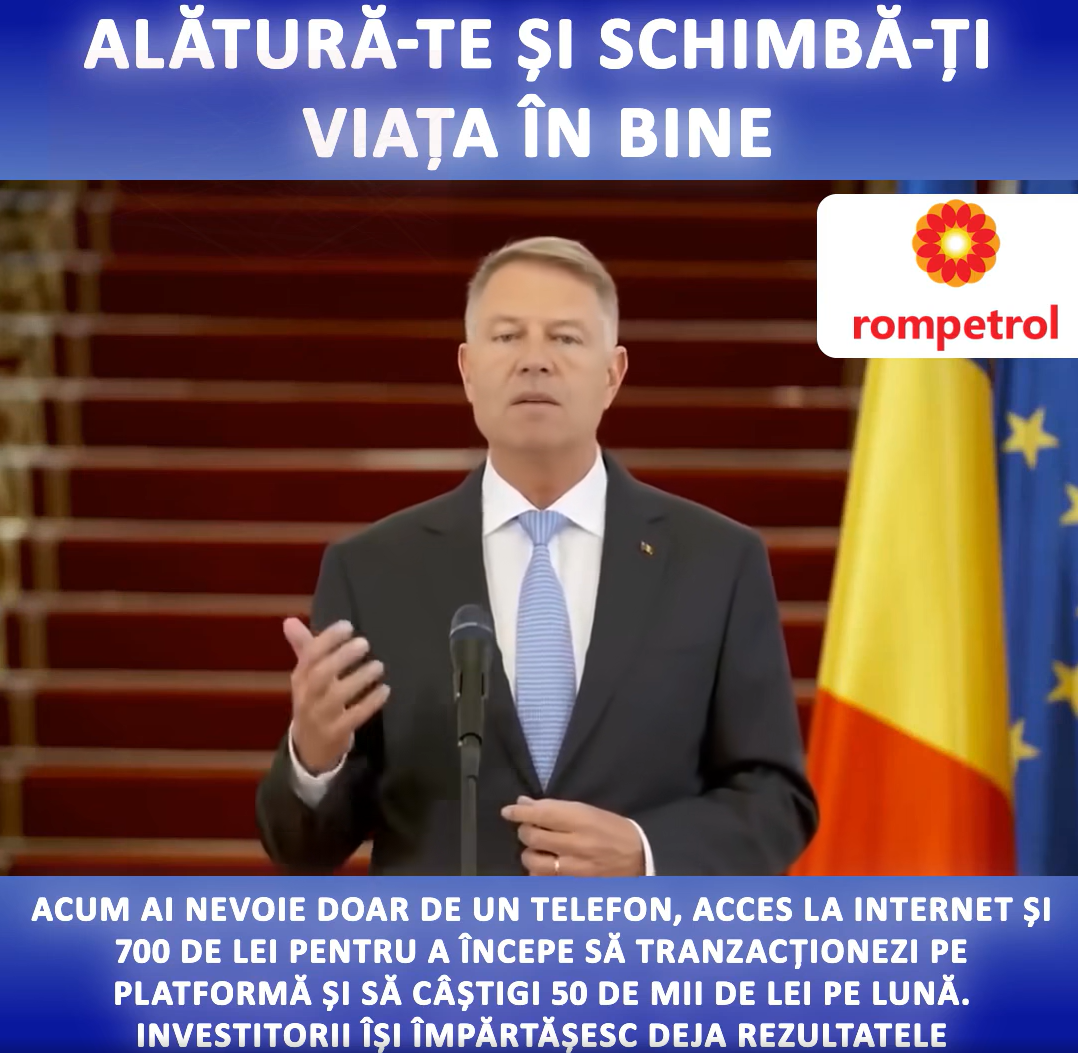

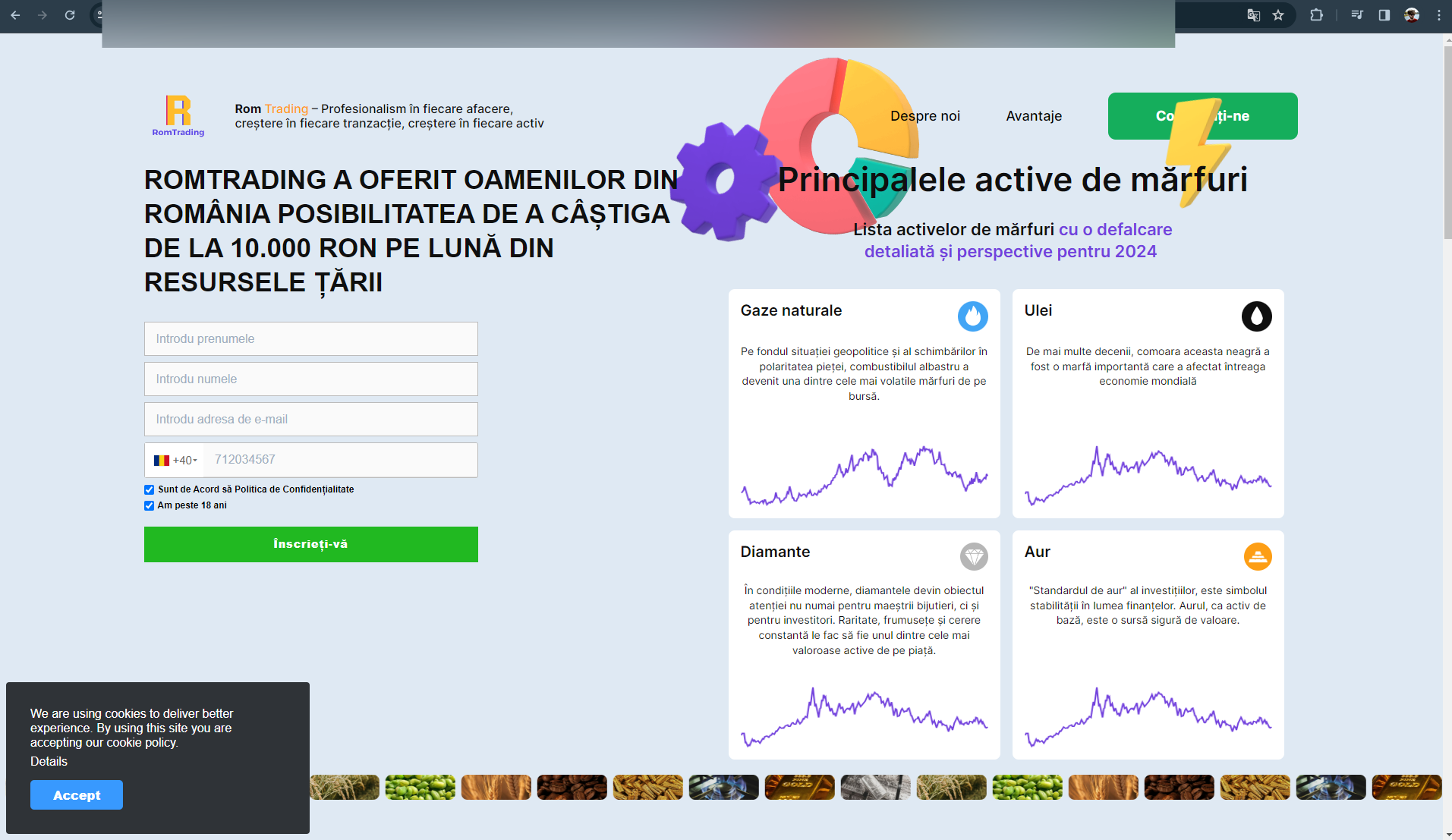

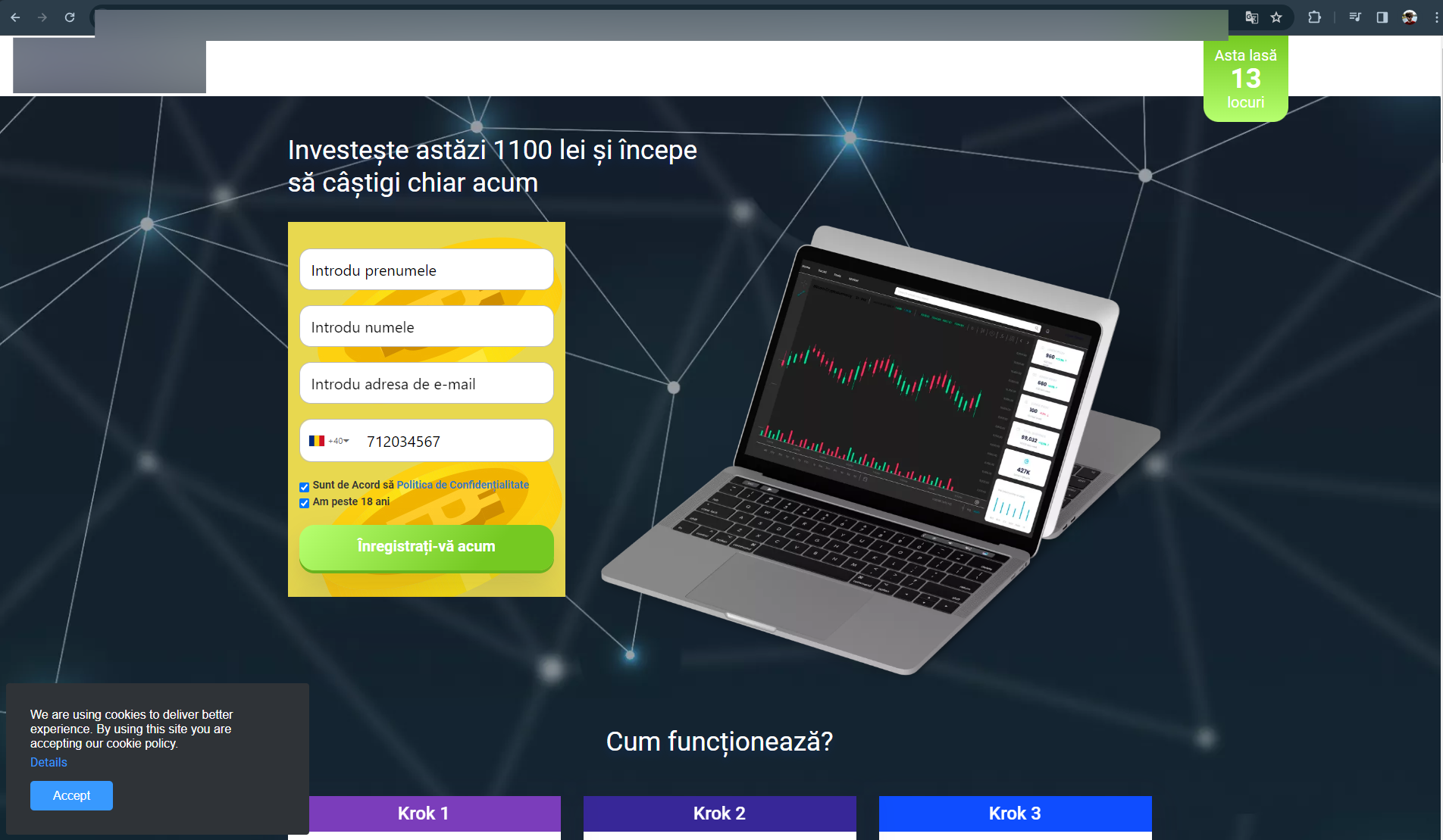

From professional tennis player Simona Halep to President Klaus Iohannis, scammers have flooded Facebook with fake ads promoting a supposedly government-endorsed investment platform that promises users many benefits.

The videos also claim that any earnings on the platform are classified as nontaxable and guaranteed by the Romanian Government and the National Bank of Romania. Some of the fraudulent investment opportunities were tied to big company names such as Rompetrol and Hidroelectrica.

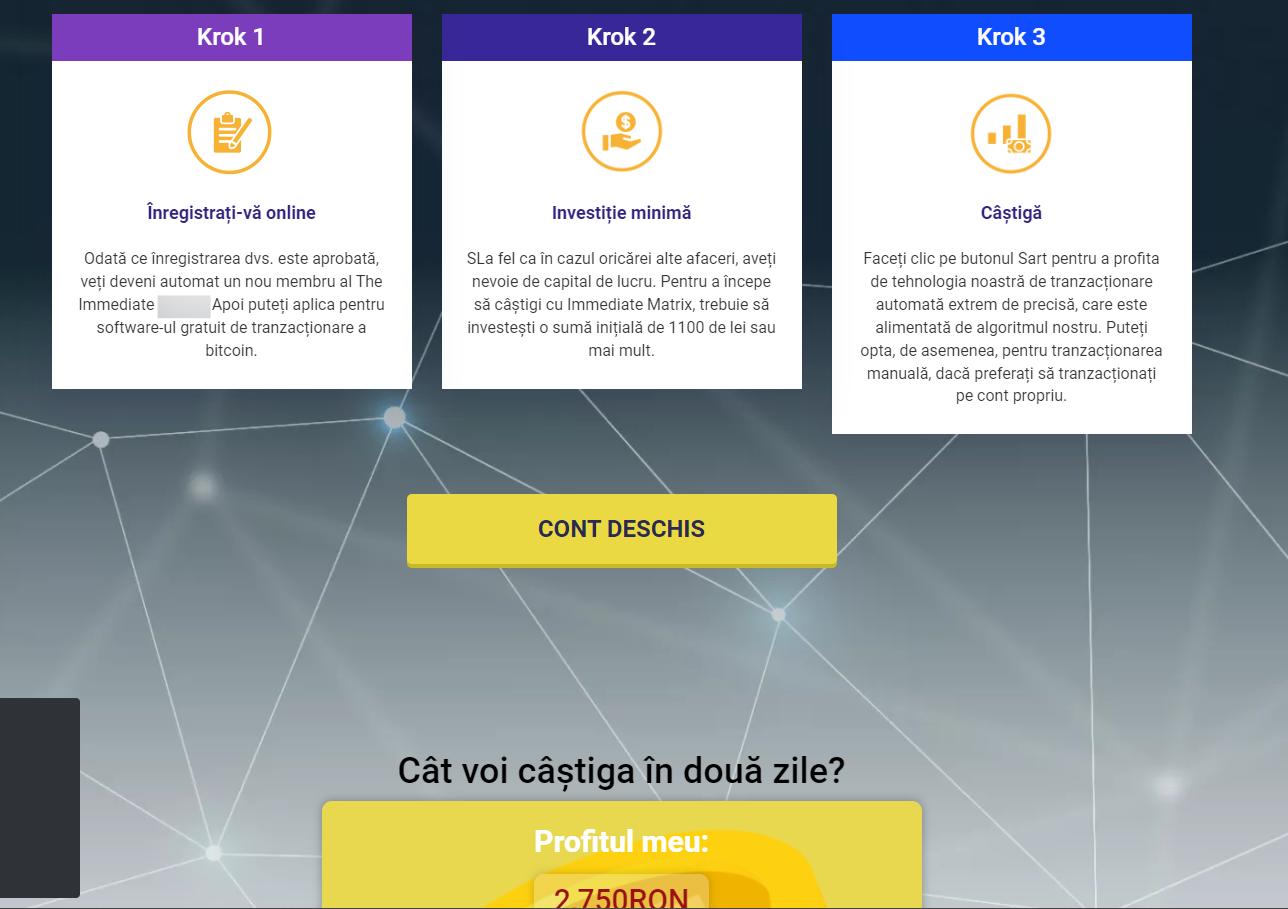

The minimum investment for the bogus platform begins at 700 RON (about $150), with potential earnings of 2,750 RON ($540) in as little as two days.
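A quick calculation shows how implausible this promise is. The ads leave it unclear whether the 2,750 RON is the total payout or profit on top of the stake. Reading it as the total payout on the 700 RON minimum, which is the more conservative interpretation, gives:

$$
\text{multiple} = \frac{2750}{700} \approx 3.93, \qquad \text{return} = \frac{2750 - 700}{700} \approx 2.93 = 293\%
$$

That is a return of roughly 293% in two days. If the 2,750 RON is instead pure profit, the two-day return rises to about 393%. Figures like these are exactly the kind of too-good-to-be-true returns the tips below warn about.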

To register with the so-called government-endorsed investment platforms, Romanian users need to fill out their name and email address and provide their phone number.

The fraudulent websites do not request credit card numbers or any other payment. Instead, scammers most likely contact victims via WhatsApp and email to extract more personal information and steal their money.

How can you protect against deepfake audio scams?

Detecting voice cloning can be very challenging, especially since the advancements in artificial intelligence and deep learning technologies have significantly enhanced the quality of synthetically created voices.

However, there are several tips you can employ to help detect and protect yourself against voice cloning:

1. Check the quality and consistency of the voice: Pay attention to the quality of the voice. Some cloned voices might have unusual tones, be static, or present inconsistencies in speech patterns.

2. Background noise and artifacts: While high-quality voice cloning minimizes background noise, it could still be present in a synthetic audio clip or video. Carefully listen for unusual background noise or digital artifacts.

3. Be wary of unusual requests and too-good-to-be-true deals: Use caution if the caller is pressing for personal information or money, or making unusual requests. Voice cloning scams are used to steal your money. You should always scrutinize ads and videos promising you huge returns on investments even though they seem endorsed by a celebrity, social media influencer or politician.

4. Verify: If you're suspicious, hang up and contact the person or organization through official channels. Do not use any contact information given to you during the call.

5. Exercise caution when sharing personal info: Be cautious about how much personal information you share online, and avoid sharing voice samples with strangers you meet online. Scammers can use any details from social media to make their impersonation sound very convincing. You can find out the extent of your digital footprint and even sniff out social media impersonators using Bitdefender Digital Identity Protection, our dedicated identity protection service.

6. Use security software on your device: Employ comprehensive security solutions like Bitdefender, which offers various features to protect against phishing and fraud. Although voice clone detection may not be a feature, our solutions protect your money and data from fraudulent attempts spread via voice cloning scams.

7. Use dedicated tools to check for scams: You can outsmart scammers by using Bitdefender Scamio, our free next-gen AI chatbot and scam detector, to find out if the online content you interact with or the messages you receive are attempts to scam you.

8. Stay informed: Keep up with the latest security threats and how they work. Our blogs and newsletters help you stay aware of new and existing online scams and give you insights into how you and your family can stay safe.

9. Report: Always report voice cloning scams you come across on social media platforms and notify the police and other relevant bodies of any suspicious behaviors and fraudulent attempts.


Author

With more than 15 years of experience in cyber-security, I manage a team of experts in Risks, Threat Intel, Automation and Big Data Processing.


Andrei is a graduate in Automatic Control and Computer Engineering and an enthusiast exploring the field of Cyber Threat Intelligence.


I'm a software engineer with a passion for cybersecurity & digital privacy.
